SALT LAKE CITY -- University of Utah researchers recently discovered a way to decode words from brain signals. In other words, the brain speaks.

This breakthrough study, published in this month's Journal of Neural Engineering, is an early step toward enabling severely paralyzed people to speak with their thoughts.

As we speak, our brains signal our mouths to make words. Bradley Greger, an assistant professor of bioengineering at the University of Utah, set out with his research team to decode spoken words using only brain signals.

"That's what we really need to know: what are the patterns of neurosignals that correlate with the spoken words?" Greger said.

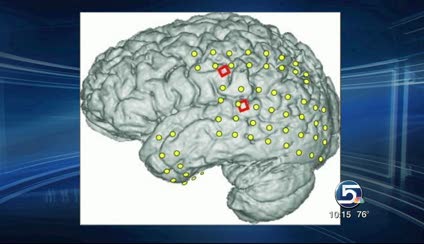

A special micro-electrocorticographic (microECoG) device recorded the brain signals that were then decoded into words. It used two grids of microelectrodes implanted beneath the skull, without penetrating the brain.

"Since it's such a small grid, we can place it precisely over the area of the brain that controls speech," Greger explained.

Words originate as patterns of electrical activity in the brain before they are ever spoken. The researchers tapped into that activity in a volunteer patient and found that it carries information at the microscale.

"There really is information at that scale, the microscale, from the surface of the brain," Greger said.

The scientists recorded brain signals as the patient repeatedly read 10 simple words, like "yes" and "no," "cold" and "hungry."

Examining the brain signals, the team picked the correct word 28 percent to 48 percent of the time.

That's better than chance, but not good enough for a device to translate a paralyzed person's thoughts into words spoken by a computer.
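To put "better than chance" in numbers, here is a minimal sketch of the arithmetic. It assumes each of the 10 words was equally likely on every trial, so blind guessing would be right about 1 time in 10. The names `NUM_WORDS` and `times_chance` are illustrative only and do not come from the study.

```python
# Chance baseline for a 10-word vocabulary.
# Assumption: every word is equally likely on each trial.
NUM_WORDS = 10
chance = 1 / NUM_WORDS  # 0.10, i.e. 10 percent

# Accuracy range reported in the story.
reported_low, reported_high = 0.28, 0.48


def times_chance(accuracy, chance_level=chance):
    """Return how many times better than blind guessing an accuracy is."""
    return accuracy / chance_level


print(f"chance level: {chance:.0%}")
print(
    f"reported accuracy is {times_chance(reported_low):.1f}x "
    f"to {times_chance(reported_high):.1f}x chance"
)
```

Under that assumption, the reported range works out to roughly three to five times the guessing rate, while still leaving the decoder wrong on more than half of the trials.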

Essentially, the researchers validated that there is information at that level and that speech can be extracted from it. Now they are moving on to the next generation and hope to come up with a device that patients can use to communicate with words.

"What we need to do now is scale this device up a bit, not in terms of the size of the electrodes, but the number of electrodes so we can cover a larger area of the brain and get more information out," Greger said.

If patients speak the words the same way each time, Greger says, the researchers will also get more accurate results.

Soon, the researchers hope to do a feasibility study on translating brain signals into computer-spoken words.

"That will give us, we hope and we think, the tool to really enable people to have restored communication," Greger said.

If accuracy improves, a communication device could quickly follow.

E-mail: jboal@ksl.com